The American right is partially defined by its embrace of debunked conspiracy theories, such as the big lie about the 2020 election and those involving all things COVID. While some conspiracy theories are intentionally manufactured by those who know they are untrue (such as the 2020 election conspiracy theories), others might start with people misreading things. For example, consider the claim that Bill Gates was putting microchips in the COVID vaccines.

The Verge does a step-by-step analysis of how this conspiracy theory evolved, which is an excellent example of how conspiracy claims arise, mutate, and propagate. The simple version is this: in a chat on Reddit, Gates predicted that people would have a digital “passport” of their health records. Some Americans who attended K-12 public schools have already used a paper version of this. I have my ancient elementary school health records, which I recently consulted to confirm I had received my measles booster as a kid. As this is being written, measles has returned to my adopted state of Florida. The idea of using tattoos to mark people when they are vaccinated has also been suggested as a solution to the problem of medical records in places where record keeping is spotty or non-existent.

Bill Gates’s prediction was picked up by a Swedish website focused on biohacking, which proposed using an implanted chip to store this information. This is not a new idea for biohackers or science fiction, but it was not Gates’s idea. However, the site used the untrue headline, “Bill Gates will use microchip implants to fight coronavirus.” As should surprise no one, the family tree of the conspiracy leads next to my adopted state of Florida.

Pastor Adam Fannin of Jacksonville read the post and uploaded a video to YouTube. The title is “Bill Gates – Microchip Vaccine Implants to fight Coronavirus,” which is an additional untruth on top of the untrue headline from the Swedish site. This idea spread quickly until it reached Roger Stone. The New York Post ran the headline “Roger Stone: Bill Gates may have created coronavirus to microchip people.”

Those familiar with the game of telephone might see this as a dangerous version of it, as each person changes the claim until it has almost no resemblance to the original. Just as with games of telephone, it is worth considering that people intentionally made changes. In the case of a game of telephone, the intent is to make the final version funny. In the case of conspiracy theories, the goal is to distort the original into the desired straw man. In the case of Bill Gates, it started out with the innocuous idea that people would have a digital copy of their health records and ended up with the claim that Bill Gates might have created the virus to put chips in people. In addition to showing how conspiracy claims can devolve from innocuous claims, this also provides an excellent example of how conspiracy theories sometimes get it right that we should be angry at someone or something but get the reasons for that anger wrong.

While there is no good evidence for the conspiracy theories about Gates and microchips, it is true that we should be angry at Bill Gates’s COVID wrongdoings. Specifically, Gates used his foundation to impede access to COVID vaccines. This was not a crazy supervillain plan; it was “monopoly medicine.” As such, you should certainly loathe Bill Gates for his immoral actions, but not because of the false conspiracy theories. As an aside, it is absurd that when there are so many real problems and real misdeeds to confront, conspiracy theorists spend so much energy generating and propagating imaginary problems and misdeeds. Obviously, these often serve some people very well by distracting attention from the real problems. But back to the origin of conspiracy theories.

While, as noted above, people do intentionally make false claims to give birth to conspiracy theories, it also makes sense that unintentional misreading can be a factor. Having been a professor for decades, I know that people often unintentionally misread or misinterpret content.

For the most part, when professors are teaching basic and noncontroversial content, they endeavor to provide the students with a clear and correct reading or interpretation. Naturally, there can be competing interpretations and murky content in academics, but I am focusing on the clear, simple stuff where there is general agreement and little or no opposition. And, of course, no one has anything to gain from advancing another interpretation. Even in such cases, students can badly misinterpret things. To illustrate, consider this passage from the Apology:

Meletus: Intentionally, I say.

Socrates’ argument is quite clear and, of course, I go through it carefully because this argument is part of the paper for my Introduction to Philosophy class. Despite this, every class has a few students who read Socrates’ argument as him asserting that he did not corrupt the youth intentionally because they did not harm him. But Socrates does not make that claim; central to his argument is the claim that if he corrupted them, then they would probably harm him. Since he does not want to be harmed, then he either did not corrupt them or did so unintentionally. This is, of course, an easy misinterpretation to make by reading into the argument something that is not there but seems like it perhaps should or at least could be. Students are even more inclined to read Socrates as claiming that the youth will certainly harm him if he corrupts them and then build an argument around this erroneous reading. Socrates claims that the youth would be very likely to harm him if he corrupted them and so he was aware that he might not be harmed.

My point is that even when the text is clear, even when someone is actively providing the facts, even when there is no controversy, and even when there is nothing to gain by misinterpreting the text, it still occurs. And if this can occur in ideal conditions (a clear, uncontroversial text in a class), then it should be clear how easy it is for misinterpretations to arise in “the wild.” As such, a person can easily misinterpret text or content and sincerely believe they have it right—thus leading to a false claim that can give rise to a conspiracy theory. Things are much worse when a person intends to deceive. Fortunately, there is an easy defense against such mistakes: read more carefully and take the time to confirm that your interpretation is the most plausible. Unfortunately, this requires some effort and the willingness to consider that one might be wrong, which is why misinterpretations occur so easily. It is much easier to go with the first reading (or skimming) and more pleasant to simply assume one is right.

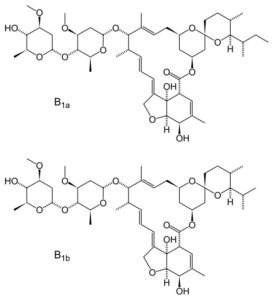

During the last pandemic, Americans who chose to forgo vaccination were hard hit by COVID. In response, some self-medicated with ivermectin. While this drug is best known as a horse de-wormer, it is also used to treat humans for a variety of conditions and many medications are used to treat conditions they were not originally intended to treat. Viagra is a famous example of this. As such, the idea of re-purposing a medication is not itself foolish. But there are obvious problems with taking ivermectin to treat COVID. T

First, while an abortion kills an entity there is good faith moral debate about whether the entity is a person. In contrast, a person who did not get vaccinated during the pandemic put those who are indisputably people at risk and, in many cases, without their choice or consent. One can, of course, argue that the aborted entity is a person and start up the anti-abortion debate. But this would have an interesting consequence.

During the last pandemic, some organizations mandated vaccination against COVID-19. As another pandemic is inevitable, it is worth revisiting the moral issue of mandatory vaccination in response to a pandemic.

It might seem like woke madness to claim that medical devices can be biased

As a philosopher, I annoy people in many ways. One is that I almost always qualify the claims I make. This is not to weasel (weakening a claim to protect it from criticism) but because I am aware of my epistemic limitations: as Socrates said, I know that I know nothing. People often prefer claims made with certainty and see expressions of doubt as signs of weakness. Another way I annoy people is by presenting alternatives to my views and providing reasons as to why they might be right. This has a downside of complicating things and can be confusing. Because of these, people often ask me “what do you really believe!?!” I then annoy the person more by noting what I think is probably true but also insisting I can always be wrong. This is for the obvious reason that I can always be wrong. I also annoy people by adjusting my views based on credible changes in available evidence. This really annoys people: one is supposed to stick to one view and adjust the evidence to suit the belief. The origin story of COVID-19 provides an excellent example for discussing this sort of thing.

While the wealthy did very well in the pandemic, businesses and employees were eager to get back to normal economic activity. While the vaccines were not perfect, they helped re-open the economy. As another pandemic is certainly on the way, it is worth considering the issue of vaccine mandates again.

Epistemology is a branch of philosophy concerned with theories of knowledge. The name is derived from the Greek terms episteme (knowledge) and logos (explanation).
