While people have been engaged in telework for quite some time, ever-improving technology will expand the range of jobs allowing for this long-distance labor. This raises a variety of interesting issues.

Some forms of telework are, by today’s standards, rather mundane and mostly non-controversial. For example, online classes are normal. Other jobs are more controversial, such as assassinating people with drones.

One promising (and problematic) area of teleworking is telemedicine. Currently, most telemedicine is primitive and mostly involves medical personnel interacting with patients via video conferencing software (“take two aspirin and Zoom me in the morning”). Given that surgical robots exist, it is probably only a matter of time before doctors and nurses routinely operate “doc drones” to perform medical procedures.

There are many positive aspects to such telemedicine. One is that doc drones will allow medical personnel to safely operate in dangerous areas. To use the obvious example, a doctor could use a drone to treat patients infected with Ebola while running no risk of infection. To use another example, a doctor could use a drone to treat a patient during a battle or occupation without risking being shot or blown up.

A second positive aspect is that a doc drone could be deployed in remote areas and places that have little or no local medical personnel. For example, areas in the United States that are currently underserved could be served by such doc drones. As the Trump regime devastates rural hospitals, telemedicine will become increasingly essential.

A third positive aspect is that if doc drones became cheap enough, normal citizens could have their own doc drone (with limited capabilities relative to hospital grade drones). This would allow for very rapid medical treatment. This would be especially useful given the aging populations in countries such as the United States.

There are, however, potential downsides to the use of doc drones. One is that the use of doc drones would allow companies to offshore and outsource medical jobs, just as companies have sent programming, manufacturing and technical support jobs overseas. This would allow medical businesses to employ lower-paid foreign medical workers in place of higher-paid local medical personnel. Such businesses could also handle worker complaints about pay or treatment simply by contracting new employees in countries that are worse off and hence have medical personnel who are even more desperate. While this would be good for the bottom line, it would be problematic for local medical personnel.

It could be contended that this would be good since it would lower the cost of medical care and would also provide medical personnel in foreign countries with financial opportunities. In reply, there is obvious concern about the quality of care (one might wonder if medical care is something that should go to the lowest bidder) and the fact that these medical personnel would have better opportunities practicing medicine in person in the countries they serve remotely. Naturally, those running the medical companies will want to ensure that the foreign medical personnel stay in their countries and this could be easily handled by getting Congress to pass tougher immigration laws, thus ensuring a ready supply of cheap medical labor.

Another promising area of telework is controlling military drones. The United States currently operates military drones, but given the government’s love of contracting out services, it is just a matter of time before battle drones are routinely controlled by private military contractors (or mercenaries, as they used to be called).

The main advantage of using military drones is that the human operators are out of harm’s way. An operator can also quickly shift operations as needed, which can reduce deployment times. Employing private contractors also yields numerous advantages, such as being able to operate outside the limits imposed by the laws and rules governing the military. There can also be the usual economic advantages. Imagine corporations profiting from being able to keep wages and benefits for the telesoldiers very low. There is, of course, the concern that employing foreign mercenaries might cause serious problems, but perhaps one should just think of the potential shareholder and executive profits and let the taxpayers worry about paying for any problems.

There are other areas in which teleworking would be appealing. Such areas are those that require the skills and abilities of a human and cannot simply be automated yet can be done via remote control. Teleworking would also need to be cheaper than hiring a local human to do the work. Areas such as table waiting, food preparation, and retail will most likely not see teleworkers replacing low-paid local workers. These jobs are likely to be automated. However, areas with relatively high pay could be worth the cost of converting to telework.

One obvious example is education. While the pay for American professors is relatively low and most professors are now underpaid adjuncts, there are people outside the United States who would be happy to work for even less. Running an online class, holding virtual office hours and grading work just requires a computer and an internet connection. With translation software, the education worker would not even need to know English to teach American students.

Obviously enough, since administrators would be making the decisions about whose jobs get outsourced, they would not outsource their jobs. They would remain employed. In fact, with the savings from replacing local faculty they could give themselves raises and hire more administrators. This would progress until the golden age of education is reached: campuses populated solely by well-paid administrators.

Construction, maintenance, repair and other such work might be worth converting to telework. This would require machines that are cheap enough to justify hiring a low-paid foreign worker over a local worker. However, a work drone could be operated round the clock by shifts of operators (aside from downtime for repairs and maintenance) and there would be no vacations, workers’ compensation or other such costs. After all, the population of the entire world would be the workforce and any workers that started pushing for better pay, vacations or other benefits could be replaced by others who would be willing to work for less. If such people become difficult to find, a foreign intervention or two could set things right and create an available population of people desperate to do telework.

Large scale telework would also seem to lower the value of labor since the competition among workers would be worldwide. A person living in Maine who applied for a telejob would be up against people from Argentina to Zimbabwe. While this will be great for the job creators, it will probably be less great for the job fillers.

While this dystopian (from the perspective of the 99%) view of telework seems plausible, it is also worth considering that telework might be beneficial to the laboring masses. After all, it would open opportunities around the world and telework would require stable areas with adequate resources such as power and the internet. So companies would have an interest in building such infrastructure. As such, telework could make things better for some workers. Telework would also be relatively safe, although it could require very long hours and impose stress.

Telework can also build the foundation for full automation, replacing the human operator with AI. The conversion would only involve the cost of the AI, since it could simply interface with the existing hardware. For example, a doc drone would go from being controlled by a human doctor to being controlled by DrGPT.

It is common practice to sequence infants to test for various conditions. From a moral standpoint, it seems obvious that these tests should be applied and expanded as rapidly as cost and technology permit (if the tests are useful, of course). The main argument is utilitarian: these tests can find dangerous, even lethal conditions that might not be otherwise noticed until it is too late. Even when such conditions cannot be cured, they can often be mitigated. As such, there would seem to be no room for debate on this matter. But, of course, there is.

While exoskeletons are being developed primarily for military, medical and commercial applications, they have obvious potential for use in play. For example, new sports might be created in which athletes wear exoskeletons to enable greater performance. An exoskeleton is a powered frame that attaches to the body to provide support and strength. The movie Live, Die Repeat: Edge of Tomorrow featured combat exoskeletons. These fictional devices allow soldiers to run faster and longer while carrying heavier loads, giving them an advantage in combat. There are also peaceful applications of technology, such as allowing people with injuries to walk and augmenting human abilities for the workplace. For those concerned with fine details of nerdiness, exoskeletons should not be confused with cybernetic parts (these fully replace body parts, such as limbs or eyes) or powered armor (like that used in the novel Starship Troopers and by Iron Man).

In the last essay I suggested that although a re-animation is not a person, it could be seen as a virtual person. This sort of virtual personhood can provide a foundation for a moral argument against re-animating celebrities. To make my case, I will use Kant’s arguments about the moral status of animals.

Socrates, it is claimed, was critical of writing and argued that it would weaken memory. Many centuries later, people worried that television would “rot brains” and that calculators would destroy people’s ability to do math. More recently, computers, the internet, tablets, and smartphones were supposed to damage the minds of students. The latest worry is that AI will destroy the academy by destroying the minds of students.

My name is Dr. Michael LaBossiere, and I am reaching out to you on behalf of the CyberPolicy Institute at Florida A&M University (FAMU). Our team of professors, who are fellows with the Institute, have developed a short survey aimed at gathering insights from professionals like yourself in the IT and healthcare sectors regarding healthcare cybersecurity.

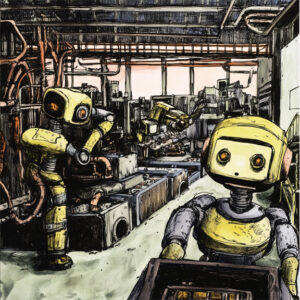

The term “robot” and the idea of a robot rebellion were introduced by Karel Čapek in Rossumovi Univerzální Roboti. “Robot” is derived from the Czech term for “forced labor” which was itself based on a term for slavery. Robots and slavery are thus linked in science fiction. This leads to a philosophical question: can a machine be a slave? Sorting this matter out requires an adequate definition of slavery followed by determining whether the definition can fit a machine.
