This essay continues the discussion of the logic of conspiracy theories. Conspiracy theorists use the same logical tools as everyone else, but they use them in different ways. In the previous essay I discussed how conspiracy theorists use the argument from authority. I will now look at the analogical argument.

In an analogical argument you conclude that two things are alike in a certain respect because they are alike in other respects. An analogical argument usually has three premises and a conclusion. The first two premises establish the analogy by showing that the things (X and Y) being compared are similar in certain respects (properties P, Q, R, etc.). The third premise establishes that X has an additional property, Z. The conclusion asserts that Y has property Z as well. The form looks like this:

Premise 1: X has properties P, Q, and R.

Premise 2: Y has properties P, Q, and R.

Premise 3: X has property Z.

Conclusion: Y has property Z.

While one might wonder how reasoning by analogy could lead to accepting a conspiracy theory, it works very well in this role. If property Z is a feature of a conspiracy theory, such as the government harming citizens, then all that is needed to make the argument is something else with that property. Then it is easy to draw the analogy.

For example, consider an anti-vaxxer who thinks there is a conspiracy to convince people that unsafe vaccines are safe. They could make an analogical argument comparing vaccines to what happened during the opioid epidemic. This epidemic was caused by pharmaceutical companies lying about the danger of opioids, doctors being bribed to prescribe them, pharmacies going along with the prescriptions, and the state allowing it all to happen. Looked at this way, concluding that what was true of opioids is also true of vaccines can seem reasonable. Yet the conspiracy theory about vaccines is mistaken. So, how does one assess this reasoning, and what mistakes do conspiracy theorists make? The answer is that there are three standards for assessing the analogical argument, and conspiracy theorists don’t apply them correctly.

First, the more properties X and Y have in common, the better the argument. The more two things are alike in other ways, the more likely it is that they will be alike in a specific way. In the case of vaccines and opioids, there are many similarities; for example, both involve companies, doctors, pharmacies, and the state.

Second, the more relevant the shared properties are to property Z, the stronger the argument. A specific property, for example P, is relevant to property Z if the presence or absence of P affects the likelihood that Z will be present.

Third, it must be determined whether X and Y have relevant dissimilarities as well as similarities. The more dissimilarities and the more relevant they are, the weaker the argument.
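As a rough illustration, the three standards can be sketched as a toy scoring function. This sketch is not part of the original argument: the `Property` class, the `analogy_strength` function, and every relevance weight below are hypothetical, and real assessment is a matter of judgment, not arithmetic. It only shows how the standards pull in different directions: relevant similarities add support, irrelevant ones add nothing, and relevant differences subtract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Property:
    name: str
    # Hypothetical weight: 0.0 means the property has no bearing on Z,
    # 1.0 means it strongly affects whether Z is present.
    relevance: float


def analogy_strength(shared, differences):
    """Toy net score: relevance of similarities minus relevance of differences.

    Standard 1: each relevant shared property raises the score.
    Standard 2: more relevant shared properties raise it more.
    Standard 3: relevant differences lower it.
    """
    support = sum(p.relevance for p in shared)
    against = sum(p.relevance for p in differences)
    return support - against


# Hypothetical weights for the opioid/vaccine comparison (assumptions,
# chosen only to make the example concrete).
shared = [
    Property("involves pharmaceutical companies", 0.25),
    Property("involves doctors and pharmacies", 0.25),
    Property("involves the state", 0.25),
    Property("profit motive", 0.5),
]
differences = [
    Property("extensively tested and known to be safe", 1.0),
    Property("scientific evidence of addictiveness and danger", 1.0),
]

print("Similarities only:", analogy_strength(shared, []))
print("With differences: ", analogy_strength(shared, differences))
```

A score above zero means the weighted similarities outweigh the weighted differences; a score below zero means the analogy is undercut. The design choice to subtract rather than average keeps the first standard visible, since each additional relevant similarity always raises the score.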

In the case of inferring an unreal conspiracy to sell dangerous vaccines from the very real opioid conspiracy, one must weigh the similarities and differences. While there are clearly relevant similarities, there are some crucial differences. Most importantly, vaccines have been extensively tested and are known to be safe. In contrast, all the scientific evidence supports common sense: opioids are addictive and potentially dangerous. While people want to make money off both, this does not entail that vaccines are not safe, even though opioids are dangerous. While the analogy between the opioid conspiracy and the vaccine conspiracy breaks down, there is nothing wrong with reasoning by analogy. If the standards are applied and relevant differences are considered, this method of reasoning is quite useful.

It is rational for conspiracy theorists to consider real cases of wrongdoing. For example, we know that governments do engage in false flag operations or lie to “justify” wars and violence. But this fact does not prove, by itself, that any specific event is a false flag or a lie. As such, the mistake made by conspiracy theorists is not in arguing by analogy but in not being careful enough in applying the standards. So they commit the fallacy of false analogy.

While details of each conspiracy theory vary, they often attribute great power and influence to a small group engaging in nefarious activities. A classic example is the idea that NASA faked the moon landings. There are also numerous “false flag” conspiracy theories ranging from the idea that the Bush administration was behind 9/11 to the idea that school shootings are faked by anti-gun Democrats. There are also various medical conspiracy theories, such as those fueling the anti-vaccination movement.

Most Americans see overt racism as offensive and are as likely to swallow it as they are to eat a shit cookie. But like parasites the alt right aims to reproduce by infecting healthy hosts. One way it does this is by tricking the unwary into consuming their infected shit. So how is this done?

The fact that college admission is for sale is an open secret. As with other forms of institutionalized unfairness, there are norms and laws governing the legal and acceptable ways of buying admission. For example, donating large sums of money or funding a building to buy admission are within the norms and laws.

During Trump’s first term, a

While there are safe ways to enter the United States, there are also areas of deadly desert that have claimed the lives of many migrants. Americans have left water and other supplies in these areas; for example, the Unitarian Universalist Church of Tucson organized No More Deaths to provide support and reduce the number of deaths.

While conservatives are usually

The question “why lie if the truth would suffice” can be interpreted in at least three ways. One is as an inquiry about the motivation and asks for an explanation. A second is as an inquiry about weighing the advantages and disadvantages of lying. The third way is as a rhetorical question that states, under the guise of inquiry, that one should not lie if the truth would suffice.

Several years ago, singer R. Kelly was jailed and accused of sexually abusing teenagers and attempting to force his hairdresser into performing oral sex. His lawyer, Steve Greenberg, employed the “rock star rape defense”:

While the United States is experiencing a backlash from when Black Lives Mattered, there are still social expectations that set the allowed forms of racism. While racism is the foundation of United States immigration policy, blatantly attacking migrants for their color is considered impolite, at least for now. While there are various rhetorical approaches to attacking migrants, the focus of this essay is on the well-worn claim that migration needs to be restricted because migrants bring disease.

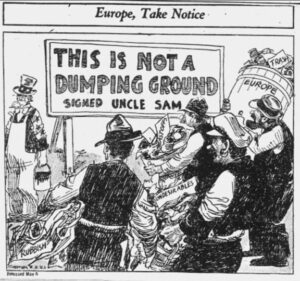
