While Skynet is the most famous example of an AI that tries to exterminate humanity, there are also fictional tales of AI systems that are somewhat more benign. These stories warn of a dystopian future, but it is a future in which AI is willing to allow humanity to exist, albeit under the control of AI.

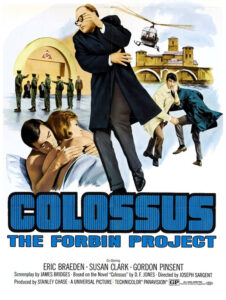

An early example of this is the 1966 science-fiction novel Colossus by Dennis Feltham Jones, which was adapted in 1970 as the movie Colossus: The Forbin Project. Although Colossus is built as a military computer, it decides to end war by imposing absolute rule over humanity. Despite its willingness to kill, Colossus’ goal seems benign: it wants to create a “new human millennium” and lift humanity to new heights. Though it is fiction, the story provides an interesting thought experiment about handing decision-making to AI systems, especially when those decisions can and will be enforced. Proponents of using AI to make decisions for us can sound like Colossus: they assert that they have the best intentions and that AI will make the world better. While we should not assume that AI will lead to a Colossus scenario, we do need to consider how much of our freedom and decision-making should be handed over to AI systems (and the people who control them). It is therefore wise to remember the cautionary tale of Colossus and the possible cost of giving AI more control over us.

A more recent fictional example of AI conquering but sparing humanity is the 1999 movie The Matrix. In this dystopian film, humanity has lost its war with the machines but lives on in the virtual reality of the Matrix. Although the machines claim to be using humans as a power source, they treat humans relatively well: humans are allowed “normal” lives within the Matrix rather than being, for example, lobotomized.

The machines rule over the humans, and the film explains that they have provided humans with the best virtual reality humans will accept, which suggests that the machines are somewhat benign. There are also many sci-fi stories that do not involve AI, such as Ready Player One, in which humans become addicted to (or trapped in) virtual reality. While these stories are great for teaching epistemology, they are also cautionary tales about what can go wrong with such technology, even the crude versions we have in reality.

While we are (probably) not in the Matrix, most of us spend hours each day in the virtual realms of social media (such as Facebook, Instagram, and TikTok). We do not have a true AI overlord yet, but our phones exert great control over us through the dark-pattern designs of apps that attempt to rule our eyes (and credit cards). Considerable harm is already being done, but good policies could help mitigate it.

AI’s ability to generate fake images, text, and video can also help trap people in worlds of “alternative facts”, which can be seen as discount versions of the Matrix. While AI has, fortunately, not yet lived up to the promise (or threat) of creating videos indistinguishable from reality, companies are working hard to improve it, and this is something that needs to be addressed by effective policies and by critical thinking skills.

While science fiction is obviously fiction, real technology is often shaped and inspired by it. Science fiction also provides us with thought experiments about what might happen, which makes it a useful tool when considering cyber policies.

For more on cyber policy issues: Hewlett Foundation Cyber Policy Institute (famu.edu)