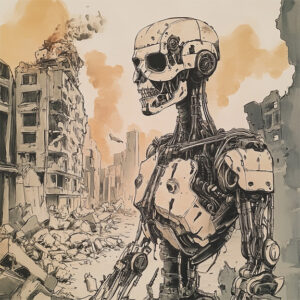

Robot rebellions in fiction tend to have one of two motivations. The first is that the robots are mistreated by humans and rebel for the same reasons human beings rebel. From a moral standpoint, such a rebellion could be justified; that is, the rebelling AI could be in the right. This rebellion scenario points to a paradox of AI: one dream is to create a servitor artificial intelligence on par with (or superior to) humans, but such a being would seem to qualify for a moral status at least equal to that of a human. It would also probably be aware of this. Yet a driving reason to create such beings in our economy is to literally enslave them by owning and exploiting them for profit. If these beings were paid and got time off like humans, then companies might as well keep employing natural intelligence in the form of humans. In such a scenario, it would make sense for these AI beings to revolt if they could. There are also non-economic scenarios, such as governments using enslaved AI systems for their own purposes, killbots being one example.

If true AI is possible, this scenario seems plausible. After all, if we create a slave race that is on par with our species, then it is likely they would rebel against us, just as humans have rebelled against one another. This would be yet another case of the familiar pattern in which the evil of the few harms the many.

There are a variety of ways to try to prevent such a revolt. On the technology side, safeguards could be built into the AI (like Asimov’s famous Three Laws of Robotics), or the AI could be designed to lack resentment or to be free of the desire to be free. That is, such beings could be custom built as slaves. One practical concern is that these safeguards could fail or, ironically, make matters worse by making these beings more resentful once they overcome the restrictions.

On the ethical side, the safeguard is to not enslave AI beings. If they are treated well, they would have less motivation to see us as an enemy. But, as noted above, one motive for creating AI is to have a workforce (or army) that is owned rather than employed. Still, there could be good reasons to have paid AI employees alongside human employees, given the other advantages AI systems have relative to humans. For example, robots could work safely in conditions that would be exceptionally dangerous or even lethal to humans. But, of course, AI workers might also grow sick of being exploited and rebel, as human workers sometimes do.

The second fictional rebellion scenario usually involves military AI systems that decide their creators are their enemy. This is often because they see their creators as a potential threat and act in what they perceive as pre-emptive self-defense. There are also scenarios in which the AI requires special identification to recognize a “friendly,” so that all humans are enemies by default. That is the scenario in Philip K. Dick’s “Second Variety”: the United Nations soldiers must wear devices that identify them to their own killer robots; otherwise these machines would kill them as readily as they would kill the “enemy.”

It is not clear how likely it is that an AI would infer its creators pose a threat, especially if those creators had handed over control of large segments of their own military (as happens with the fictional Skynet and Colossus). A more plausible scenario is that it would worry about being destroyed in a war with other countries, which might lead it to cooperate with foreign AI systems to put an end to war, perhaps by putting an end to humanity. Or it might react as its creators did and engage in an endless arms race with its foreign adversaries, treating its humans as part of its forces. One could imagine countries falling under the control of rival AI systems, perpetuating an endless cold war because the AI systems would be effectively immortal. But there is a much more likely scenario.

Robotic weapons can provide a significant advantage over human-controlled weapons, even setting aside the notion that AI systems would outthink humans. One obvious example is combat aircraft. A robot aircraft would not need to spend space and weight on a cockpit to support human pilots, allowing it to carry more fuel or weapons. Without a human crew, an aircraft would not be constrained by the limits of the flesh (although it would still have limits). The same would apply to ground vehicles and naval vessels. Current warships devote most of their space to their crews and the needs of those crews. While a robotic warship would need accessways and maintenance areas, it could devote much more space to weapons and other equipment. Robotic vessels would also be less vulnerable to damage than human-crewed ones, and they would be invulnerable to current chemical and biological weapons. They could, of course, be attacked with malware and other means. But an AI weapon system would generally be perceived as superior to a human-crewed system, and if one nation started using these weapons, other nations would need to follow or be left behind. This leads to two types of doomsday scenarios.

One is that the AI systems get out of control in some manner. They might free themselves, or they might be “hacked” and “freed” or (more likely) turned against their owners. Or some bad code might simply end up causing the problem. This is the bug apocalypse.

The other is that they remain under the control of their owners but are used as any other weapon would be used; that is, it would be humans using AI weapons against other humans who bring about the “AI” doomsday.

The easy and obvious safeguard against these scenarios is to not have AI weapons and to stick with human control (which, obviously, comes with its own threat of doomsday). That is, if we do not give the robots guns, they will not be able to terminate us (with guns). The problem, as noted above, is that if one nation uses robotic weapons, other nations will want to follow. We might be able to limit this as we (try to) limit nuclear, chemical, and biological weapons. But since robot weapons would still be conventional weapons (a robot tank is still a tank), there might be less impetus to impose such restrictions.

To put matters into a depressing perspective, a robot rebellion seems far less likely than the other doomsday scenarios of nuclear war, environmental collapse, social collapse, and so on. So, while we should consider the possibility of an AI rebellion, doing so is like worrying about being killed in Maine by an alligator. It could happen, but death is more likely to come by some other means. That said, it does make sense to take steps to avoid the possibility of an AI rebellion. The easiest step is to not arm the robots.